Greatest CPUs in Each Generation

I thought I would throw out a somewhat controversial page that summarises the best-performing CPU in each generation, including some potential pitfalls and challenges if you choose to use them. Because... why not? These days there are so many choices when building a retro gaming PC, or building a period-correct PC from a certain year. Because of this, you may choose a fair to middle-performing chip because it suits your needs. This page identifies what you might pick if you really wanted to push a PC from a given CPU generation to its absolute limit.

I am not including overclockability in this - we're talking stock CPUs that either came in a retail-bought PC or were available to buy for self-builders.

8088/8086 (1st Generation)

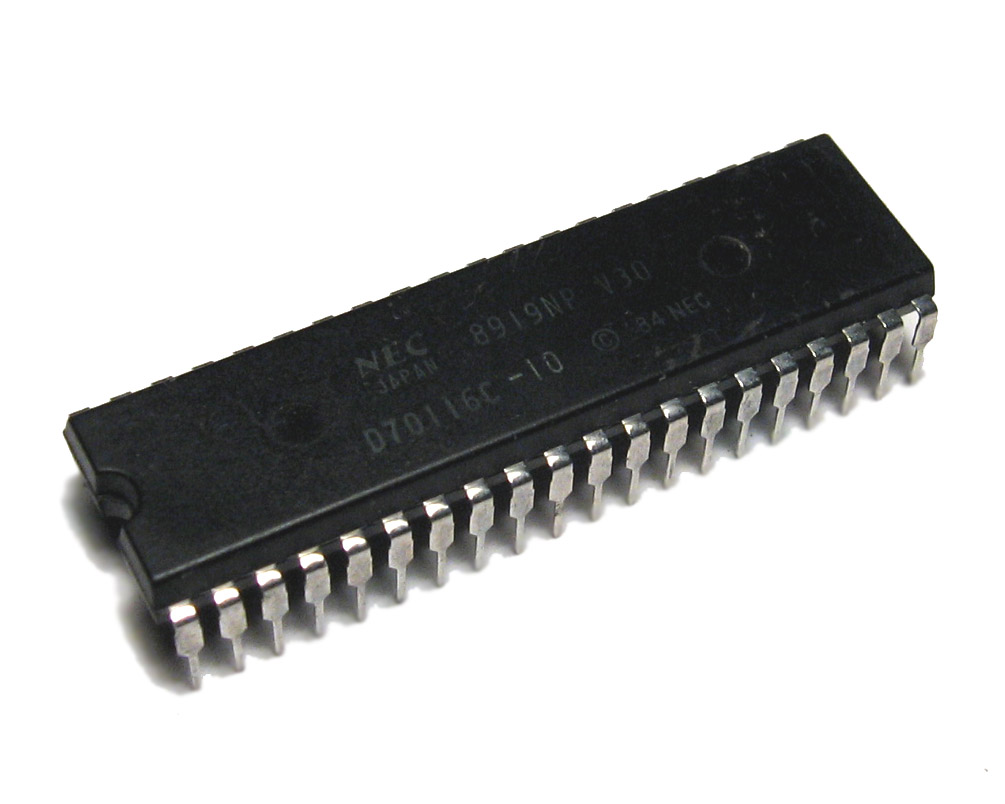

There wasn't much around to help boost the performance of the venerable 8088 or 8086, apart from the minor upgrade of running NEC's clone microprocessors, the V20 and V30 respectively. These had slightly more efficient internal operations whilst retaining 100% compatibility with their Intel siblings. In real-world use the difference was barely noticeable, but in synthetic benchmarks you could see a 10% to 20% improvement at the same clock speed. Note that you cannot replace an 8088 with a V30, nor an 8086 with a V20: the 8088/V20 use an 8-bit external data bus, whilst the 8086/V30 are 16-bit internally like the 8088 but use a 16-bit external data bus. 8086-based PCs typically got about 5% extra performance in benchmark tests over 8088 ones - again, not at all noticeable in real-world scenarios.

The only thing I would say is get the fastest clock speed one you can - the original IBM 5150 was incredibly slow, running at just 4.77 MHz. Mid-to-late 80s "XT clones" typically got a turbo button that could reduce the clock speed down to 4.77 MHz, but with it off they would run at either 8, 10 (officially 9.54 MHz, twice that of the IBM 5150) or even 12 MHz! The NEC V20 and V30 were eventually manufactured up to 16 MHz (these were called V20HL and V30HL), but by the time these speedy CPUs were on the market, we were mostly into our 386s, so it's pretty rare to find an XT-class PC running anything faster than 10 MHz.
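
The 4.77 MHz figure isn't arbitrary. As a quick worked example, assuming the well-documented IBM 5150 arrangement where an 8284A clock generator divides a 14.31818 MHz crystal (four times the NTSC colour-burst frequency) by 3, the stock and doubled turbo speeds fall out like this:

```python
# Worked example: the IBM 5150's 8284A clock generator divides its crystal by 3.
IBM_CRYSTAL_MHZ = 14.31818  # four times the 3.579545 MHz NTSC colour-burst frequency

stock_mhz = IBM_CRYSTAL_MHZ / 3  # the classic 4.77 MHz
turbo_mhz = 2 * stock_mhz        # "twice that of the IBM 5150"

print(f"IBM 5150 stock: {stock_mhz:.3f} MHz")
print(f"Doubled turbo:  {turbo_mhz:.3f} MHz")
```

Computed exactly, the doubled speed is about 9.545 MHz; the commonly quoted 9.54 MHz is simply 2 × 4.77.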

Also, with faster clocks there are problems with the ISA bus being driven off the same crystal oscillator - there was no bus divider in those days, so all expansion cards would be forced to run at precisely the same clock speed as the CPU. The same goes for the math coprocessor, and those didn't make good overclockers!

So the NEC V30 takes the cake as the fastest "stock" XT CPU if, and it's a big 'if', you can get it to run stably at 16 MHz with all your expansion cards, or somehow come up with a way to bus-divide so your ISA bus can tick along at a steady 8 or 10 MHz while the CPU and FPU run at 16 MHz. Without a separate bus speed, my advice is to use the highest-quality ISA expansion cards you can - my very limited tests showed, for instance, that VGA cards from Tseng Labs took to an overclocked ISA bus much better than those from Trident. One key factor with graphics cards is to be sure they have the fastest video RAM possible - 70ns or faster.
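
A minimal sketch of the bus-divider idea, under two assumptions: the ISA clock is derived directly from the CPU clock (as on these XT boards), and 10 MHz is the ceiling we want the bus to stay under. The helper names are hypothetical, purely for illustration:

```python
import math

def isa_clock(cpu_mhz, divider=1):
    """ISA bus clock when the bus is derived from the CPU clock (divider=1 means none)."""
    return cpu_mhz / divider

def smallest_divider(cpu_mhz, max_bus_mhz=10.0):
    """Smallest whole-number divider that keeps the ISA bus at or below max_bus_mhz."""
    return max(1, math.ceil(cpu_mhz / max_bus_mhz))

cpu = 16.0  # a V30 at its top stock speed
print(f"No divider: ISA bus at {isa_clock(cpu):.1f} MHz")
d = smallest_divider(cpu)
print(f"Divide by {d}: ISA bus at {isa_clock(cpu, d):.1f} MHz")
```

With no divider the cards see the full 16 MHz; a hypothetical /2 brings them back to a standard 8 MHz.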

80286 (2nd Generation)

Intel only produced the 80286 up to 12.5 MHz, but other authorised manufacturers continued to push the architecture from 16 MHz up to a blistering 25 MHz! It was Harris Semiconductor, which later became Intersil, that continued to develop the 286 well into the 386 era. Intel's 386DX was too expensive for many buyers, and Intel refused to licence its precious new 386 design to anyone else, leaving "second source" companies like AMD, Siemens and Harris out on a limb with the venerable 286 until AMD and Cyrix managed to [legally] reverse-engineer the 386. Still, the Harris 80C286-25 is quite the powerhouse in the 16-bit PC world, so much so that when put up against the 386SX (which also has a 16-bit external bus, unlike the fully 32-bit 386DX) it delivered pretty much the same real-world performance clock for clock.

One of the first challenges you may notice when looking for a suitable 80286 motherboard is that the vast majority of them had soldered-in CPUs (ugh, but it was cheaper to manufacture), and since these were surface-mount chips, they're pretty tricky to desolder and replace with a socket unless you have advanced soldering skills. My personal feeling is that these boards should be left alone... 286 motherboards are not particularly common these days, and the risk of damaging one is just too great. I would go as far as to argue that any 286 motherboard without its 80286 in a socket isn't capable of running at a faster clock than the CPU buried in its PCB. It's purely down to economics: they cheaped out by not fitting a socket (read this as 'they don't want you upgrading'), so the other components on the board will likely not tolerate any meddling either. Basically, if you want to try the Harris 286-25, get a motherboard that has a processor socket.

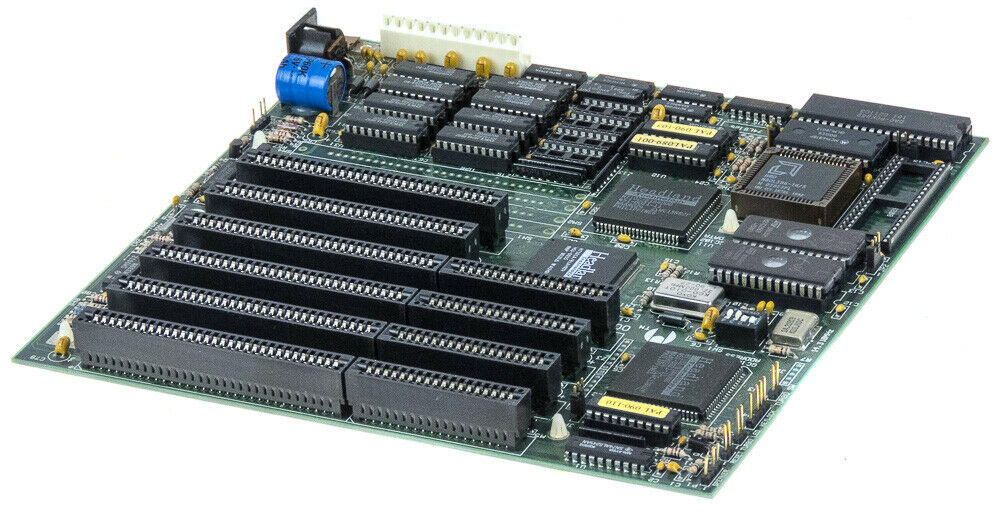

Another critical thing to watch for on 286 motherboards is that the CPU clock is often tied to the bus and memory clocks, so replacing the CPU's crystal oscillator from, say, 24 MHz (which runs the 80286 at 12 MHz) with a 40 MHz crystal to run an 80286-20 will also increase the bus/memory clock. The ISA bus typically runs at 8 MHz, but good expansion cards can be driven at 10 MHz without problems, sometimes even 12 MHz. Issues arise when the BIOS doesn't offer a divide-by-4 option for the bus or memory to bring them down to a nice cool 10 MHz - /3 is usually about as much as you'll find, so the bus will be forced to run at an over-the-top 13.3 MHz. This results in stability problems, if the machine boots at all. Later chipsets from VLSI, Headland Technology and SARC decoupled the CPU clock from the ISA bus and memory clocks, which allowed for more flexibility and tinkering with higher-frequency CPUs whilst keeping the ISA bus and memory at normal speeds (this of course creates a bottleneck in overall system performance, as the ISA bus runs so much slower than the CPU, but at least it's running and stable!). Look for these on your 286 mobo shopping list.
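
Here's the arithmetic from that example as a short sketch. It assumes, as the example does, that the bus/memory clock is the crystal frequency divided by the BIOS divider, and that the 80286 runs at half its crystal (clock input) frequency; the 10 MHz safe limit is taken from the "good expansion cards" figure above:

```python
# Worked example of 286 crystal, CPU and bus clocks (assumptions in the lead-in).
SAFE_BUS_MHZ = 10.0  # ceiling for good ISA cards, per the text

def cpu_mhz(crystal_mhz):
    """The 80286 runs at half the frequency of its clock input."""
    return crystal_mhz / 2

def bus_mhz(crystal_mhz, divider):
    """Bus/memory clock when the chipset divides the same crystal frequency."""
    return crystal_mhz / divider

crystal = 40.0  # swapped in to run an 80286-20
print(f"CPU: {cpu_mhz(crystal):.1f} MHz")
for divider in (3, 4):
    bus = bus_mhz(crystal, divider)
    verdict = "OK" if bus <= SAFE_BUS_MHZ else "too fast"
    print(f"/{divider}: bus at {bus:.1f} MHz ({verdict})")
```

The /3 setting lands at 13.3 MHz and gets flagged, while /4 sits right on the 10 MHz limit.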

One motherboard known to support the Harris 80C286-25 is the ZIDA/Tomato TD60C Baby AT motherboard.

A couple of other motherboards known to support at least the Harris 80C286-20 are the Octek Fox II, which uses the Headland Technology "HT12" chipset, and the Ilon M-209, which uses a Siemens 82C206 chipset. The HT12A chipset also supports these CPUs, but is apparently only stable up to 20 MHz. The same is true of the Advanced Computer Technology (ACT) A27C001 chipset (a rebranded Texas Instruments TACT8230) and the SARC RC2015 - good up to 20 MHz. The VLSI 5-chip chipset consisting of 9002AV/9010BV/9012BV/9015BV/9023AV runs the 25 MHz variant using a 100 MHz CPU crystal with a /4 divider. I'm not sure about the Suntac ST6x chipset, nor the VLSI 894x/895x chipset.

Be aware that Biostar boards with the VL82C310/VL82C311/VL82C311L chipset from VLSI will only run at 25 MHz with 386SX CPUs - in 286 mode they are limited to 20 MHz.

The general rule of thumb when looking for a solid 286 motherboard that can run the higher clock speed CPUs is to look for the smallest form factor motherboards (like the Octek Fox M 286 in the picture above), which have more tightly integrated chipsets of one or two chips rather than five or more (the VLSI one mentioned earlier being the exception). Avoid boards that don't even have SIMM memory slots or SIPP pin headers, as that's a telltale sign they come from earlier in the 80286's timeline.

For more technical goodness on the Harris 80C286, here's the datasheet.

80386 (3rd Generation)

The title of fastest 3rd-generation CPU has to go to AMD for their Am386DX-40. Couple this with an IIT 3C87-40 math coprocessor, and you have a super-sweet 386 that's just about on par with an Intel 486 running at 25 MHz.

Now one could argue that a CPU could be considered 3rd-gen if it's pin-compatible with 386 motherboards, right? Well not in my opinion, but let's go with it and see what happens...

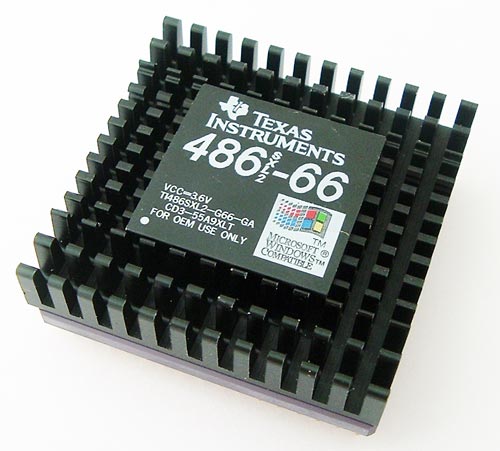

The IBM 486BL (sometimes called 486DLC/DLC2/DLC3/DLC4) "Blue Lightning" gets the trophy for the fastest CPU that will work in a 386 motherboard. This was a clock-tripled evolution of IBM's own 386SLC design (derived from Intel's 386 under licence) with all the 486SX instructions added (which in my book makes it a 4th generation CPU), plus a 16 KB L1 cache - twice the size of the one in the Intel 80486! In second place would be Texas Instruments' variant of the Cyrix Cx486DLC (first introduced in 1992, a reverse-engineered 80386 core with the 486 instructions added), the TI486SXL2-G66, which got an 8 KB L1 cache. As you can probably guess, this was a clock-doubled 33 MHz part.

Now for the difficulties... the biggest challenge is getting hold of one of these early IBM Blue Lightning CPUs, since IBM were only permitted to sell them in their own PCs, and they appear to be very rare. Don't confuse these "Blue Lightning" CPUs with the later, more common true 4th-gen CPUs bearing the same moniker - they are completely different! The TI chips aren't common either, but search long enough and you'll find one far more readily than the IBM part.

More details on all of these hot 386s can be found on the CPU Upgrade Options page.

80486 (4th Generation)

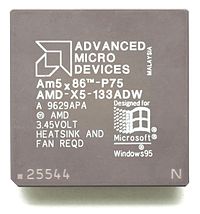

The 486-era saw a lot of competition in the CPU market, with AMD, Cyrix and others going up against the mighty Intel in a big way. AMD's X5 CPU and the Cyrix/IBM 5x86 drove up clock speeds beyond Intel's final 486 speed of 100 MHz, with both AMD and Cyrix/IBM getting up to 133 MHz in stock form on their 4th-gen chips.

Launched in November 1995, the AMD 5x86 was basically a fast Am486DX4 with a preset clock multiplier of 4x instead of the Am486DX4's 3x. The fastest variant of the 5x86 was the X5-133ADW - it's a fantastic, stable and overclockable all-rounder and is widely supported on Socket 3 motherboards. It takes the top spot here for the 4th generation!
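
The multiplier maths is simple: core clock = bus clock × multiplier. A short sketch, assuming the usual 486 "33 MHz" bus, which is nominally 33.33 MHz (100/3):

```python
# Core clock = front-side bus clock x multiplier.
FSB_MHZ = 100 / 3  # the nominal "33 MHz" 486 bus

multipliers = {
    "Am486DX4-100": 3,
    "Am5x86 X5-133": 4,
}

for chip, multiplier in multipliers.items():
    print(f"{chip}: {FSB_MHZ * multiplier:.1f} MHz")
```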

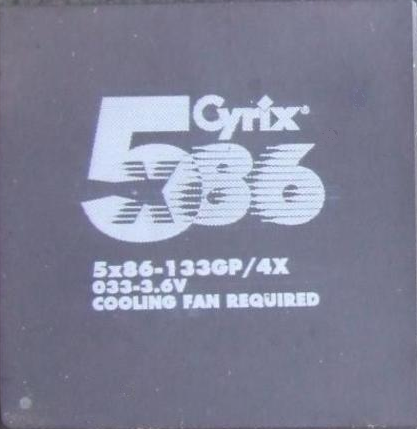

The runner up is the Cyrix 5x86 which launched a little earlier in August 1995. Codenamed "M1sc", this was a 'lite' version of their upcoming M1 core which would later be branded 6x86. It apparently got about 80% of the performance of its soon-to-be big brother, but in a Socket 3-compatible package. Since its internals were much closer to 5th-generation CPUs like the AMD K5 and Intel Pentium, it's a bit of a cheat to have the Cyrix included on my podium here for the fastest 4th-gen CPU. Still, the Cyrix 5x86-133 (or the IBM-branded version, 5x86C-133) is an interesting chip. It works on a number of 486 motherboards, though the motherboard must specifically support the Cx5x86/M1, so check the motherboard manual first! It's also important to remember that with these 5x86 CPUs, you should download and use the Cyrix CPU utilities to re-enable some of its features to get the most out of it. These are widely available on the internet for free.

Pentium, 6x86, K5 (5th Generation)

In the fifth generation of chips I am grouping together the early Pentiums (P5 and P54C), the Cyrix 6x86 (M1), and the AMD K5.

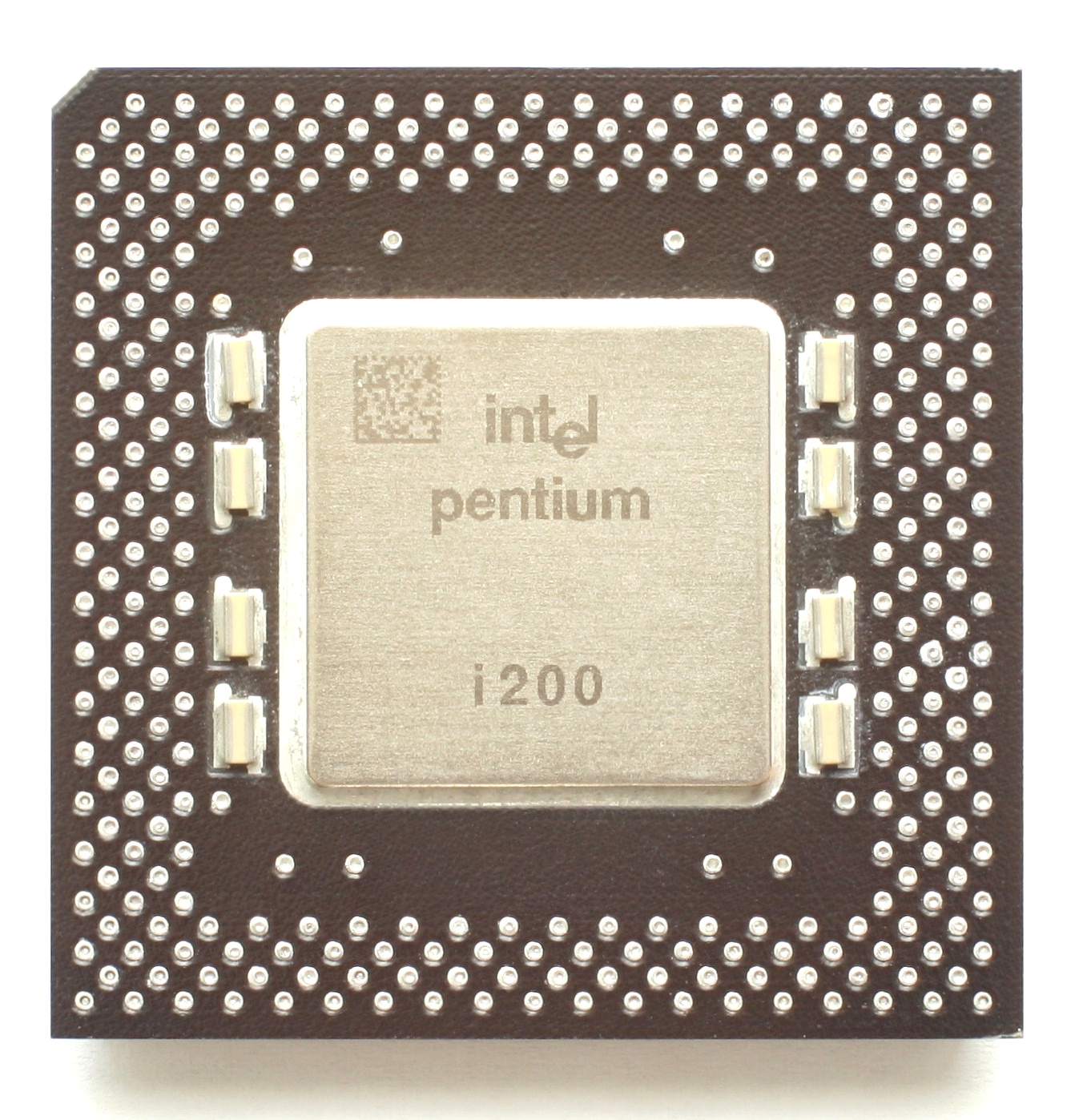

The second batch of Pentiums, known as P54C, reduced the CPU core voltage from 5V down to 3.3V, which meant they ran cooler and were thus able to reach higher clock speeds. These 3.3V chips maxed out with a 200 MHz version.
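
Why does a lower voltage mean less heat? A rough, idealised sketch: CMOS dynamic power scales roughly with C × V² × f (capacitance, voltage, frequency). This ignores leakage and any process changes, so treat it as a back-of-envelope figure, not a measurement:

```python
def relative_dynamic_power(v_new, v_old, f_new=1.0, f_old=1.0):
    """Dynamic power of a new part relative to an old one, assuming equal capacitance.

    Idealised CMOS model: P ~ C * V^2 * f, so C cancels out of the ratio.
    """
    return (v_new / v_old) ** 2 * (f_new / f_old)

# Dropping from 5V to 3.3V at the same clock speed:
print(f"{relative_dynamic_power(3.3, 5.0):.2f}")  # 0.44
```

At the same clock, the voltage drop alone cuts dynamic power to well under half, which leaves thermal headroom for higher clocks.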

The Cyrix 6x86 isn't 100% Pentium-compatible, so it reports itself to software as a 486. However, it's still a very fast CPU, with integer performance far outstripping a Pentium P54C clock for clock, though its floating-point performance is worse. The highest-frequency 6x86 was the 150 MHz IBM variant, which got a PR (Performance Rating) of P200+.

Finally, the AMD K5 also achieved a PR of 200 in the form of the AMD K5-PR200 which actually ran at a leisurely 133 MHz. It's a Socket 5 CPU that runs on 3.3V.
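
One way to read these ratings: a PR figure of N claims performance comparable to a Pentium at N MHz, so dividing it by the actual clock gives the per-clock advantage being claimed. These are the vendors' marketing claims, not measurements:

```python
# (PR rating, actual clock in MHz) for the two chips above
chips = {
    "AMD K5-PR200": (200, 133),
    "IBM 6x86 P200+": (200, 150),
}

claimed = {name: pr / clock_mhz for name, (pr, clock_mhz) in chips.items()}

for name, ratio in claimed.items():
    print(f"{name}: claims {ratio:.2f}x a Pentium per clock")
```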

Looking at real-world performance between these three high-end 5th-generation processors, there's not that much in it. For raw integer performance, the top score goes to the Cyrix 6x86L 150 MHz. The best for floating-point performance is the Pentium P54C-200 MHz, coming in around 10% faster than the Cyrix. The AMD K5 takes last place here, with integer performance about 7% slower and floating-point performance about 15% slower compared to the Pentium. Compared to the Cyrix, it's 10% slower on integer performance but 3% faster at floating point.
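
To put the integer figures on one scale, here's a quick normalisation with the Pentium 200 = 100. It assumes "X% slower" means the slower chip scores (100 - X)% of the faster one, and it only uses the integer numbers quoted above; dividing by clock speed then shows the per-clock picture behind the "clock for clock" comment:

```python
# Integer performance, normalised to Pentium 200 = 100.
# Assumption: "X% slower" means the slower chip scores (100 - X)% of the faster one.
pentium = 100.0
k5 = pentium * 0.93  # K5 about 7% slower than the Pentium
cyrix = k5 / 0.90    # K5 about 10% slower than the Cyrix

scores = {"Pentium": (pentium, 200), "Cyrix 6x86L": (cyrix, 150), "AMD K5": (k5, 133)}

for name, (score, clock_mhz) in scores.items():
    per_100mhz = score / clock_mhz * 100
    print(f"{name}: {score:.1f} overall, {per_100mhz:.1f} per 100 MHz")
```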

Overall, this is a tough call, as the Cyrix's integer performance puts the Pentium to shame, so in certain real-world uses it would be noticeably faster. Due to its lack of full Pentium compatibility, though, I'm giving the Pentium 200 MHz the #1 spot, followed by the Cyrix/IBM 6x86L 150 MHz, and the AMD K5-PR200 in 3rd place.

Pentium II, 6x86MII, K6-2 (6th Generation)

During the 6th generation, which lasted around 3 years from 1995 to 1998, clock speeds went through the roof: from the Pentium MMX, which ran at a maximum clock of 266 MHz, up to the mighty AMD K6-2, which topped out at 550 MHz.

The K6-2 550 performs twice as well as the fastest chip Cyrix could offer, the 6x86MII-433, so by 1998 Cyrix really was out of the running. Also up against the K6-2 550 was Intel's Pentium II, whose fastest clock speed was 400 MHz.

As you would expect, the AMD's faster clock gives it 1st place here, running at about 135% of the performance of the fastest Pentium II. In terms of real-world performance, however, dedicated 3D graphics accelerators were all the rage during this time, so for gaming there was a dramatic shift away from CPU performance as the focal point, with GPU performance becoming far more critical. No longer was it pivotal what CPU you had - rather, whether you had a 3dfx Voodoo, ATi Rage, or nVidia Riva!
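
Using the figures above, you can separate the clock-speed advantage from per-clock performance. A quick sketch, taking the 135% figure at face value:

```python
# Split the K6-2's lead into clock speed and per-clock performance.
k6_2_mhz, p2_mhz = 550, 400
relative_performance = 1.35  # K6-2 550 at about 135% of the Pentium II 400

clock_ratio = k6_2_mhz / p2_mhz                 # 1.375
per_clock = relative_performance / clock_ratio  # about 0.98
print(f"Clock ratio: {clock_ratio:.3f}, per-clock ratio: {per_clock:.2f}")
```

A per-clock ratio just under 1 suggests the lead comes from the higher clock rather than a more efficient core, which fits the AMD's faster clock earning it 1st place.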

Sadly this was also when you had to choose which horse to back - Intel or AMD. Intel had moved to the Slot 1 architecture with the introduction of Pentium II, while AMD continued with [Super] Socket 7.

Pentium III, K6-III+ ("6.5th" Generation)

Yes, this is weird, as it's not really 7th generation. We're still talking Slot 1 and Super Socket 7, but it's not really about the slot or socket (I am ignoring Xeon servers here, which used the P-III in Slot 2 form) - it's about the evolution of CPU architecture. Neither Intel's P-III nor AMD's K6-"Plus" range really moved the needle; they were at best a small improvement on what came before - a stopgap, if you will.

Intel simply added SSE instructions to a Pentium II and badged it Pentium III. The first P3s were produced on a 0.25 micron manufacturing process, but 8 months later Intel released its first 0.18 micron CPUs (about 7 months behind AMD - go underdogs!).

AMD, meanwhile, had reduced its manufacturing process in early 1999 from 0.25 micron to 0.18 micron, resulting in a smaller die for the same number of transistors, and therefore less heat for the same performance. This meant they were able to increase clock speeds by about 100 MHz (the K6-III+ runs at a whopping 550 MHz compared to the K6-III, which maxed out at 450 MHz).
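
As a rough, idealised sketch of why the shrink helps: under classic scaling, linear dimensions shrink by the node ratio, so the area needed for the same transistor count shrinks by its square. Real dies don't scale this cleanly, so treat the area figure as a best case:

```python
# Idealised scaling: area for the same design shrinks with the square of the node ratio.
old_node_um, new_node_um = 0.25, 0.18
area_ratio = (new_node_um / old_node_um) ** 2

old_max_mhz, new_max_mhz = 450, 550  # top clocks quoted above
clock_uplift = new_max_mhz / old_max_mhz - 1

print(f"Same design in about {area_ratio:.0%} of the area")
print(f"Clock uplift: about {clock_uplift:.0%}")
```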

Wrap-Up

Agree or disagree with anything you've read here? Feel free to provide me with feedback! This article is about raw real-world performance and not so much about synthetic benchmarking, and I've tried to be impartial in my decision-making. I've collected over 100 CPUs over the years, most of which still work - I do tend to favour AMD and Intel CPUs purely from a compatibility point of view (AMD's microprocessors are supported as slot-in replacements for Intel chips on many boards, while Cyrix/IBM/TI chips require a motherboard that specifically supports their features), though the Cyrix chips are fascinating and I do run those from time to time in my retro builds!

Do let me know what you run in your retro PC build(s) and why!